It feels as if practically everyone has been using OpenAI's ChatGPT since generative AI hit prime time. But many enterprise professionals may be embracing the technology without considering the risks of these large language models (LLMs).

That’s why we need an Apple approach to Generative AI.

What happens at Samsung should stay at Samsung

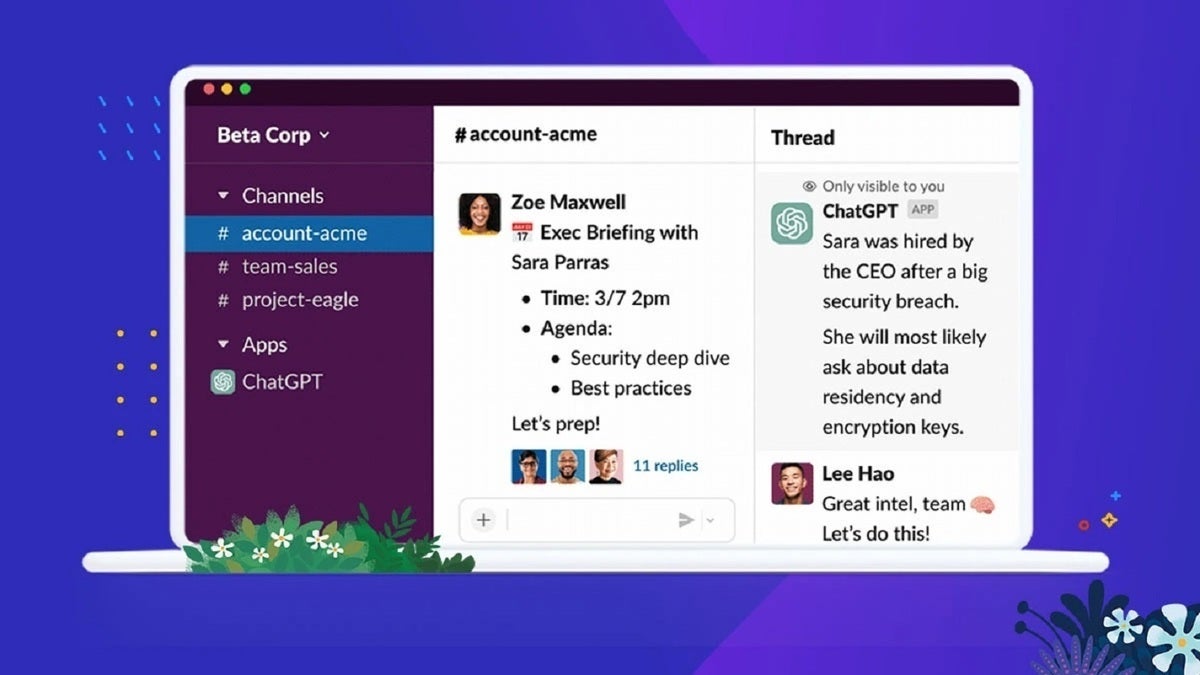

ChatGPT seems to be a do-everything tool, capable of answering questions, finessing prose, generating suggestions, creating reports, and more. Developers have used the tool to help them write or improve their code and some companies (such as Microsoft) are weaving this machine intelligence into existing products, web browsers, and applications.

That’s all well and good. But any enterprise user should heed the warnings from the likes of Europol and UK intelligence agency NCSC and consider the small print before committing confidential information. Once shared, that data is no longer controlled by the entity that shared it, but by OpenAI, meaning confidential information is no longer secure.

That may be a basic privacy issue for many users, but for companies, particularly those handling privileged information, it means using ChatGPT carries serious risks.

There have been warnings to this effect in circulation since the software appeared, but they’re clearly not being heard over the pro-LLM hype. Witness the news that engineers at Samsung Semiconductor have been using ChatGPT and, in doing so, generated three leaks of sensitive data in just 20 days.

Engineers used ChatGPT to fulfil quite straightforward tasks:

- Check errors in source code.

- Optimize code used to identify defective facilities.

- Transcribe a meeting recorded on a smartphone to create meeting minutes.

The problem is that in all these cases, the employees effectively took proprietary Samsung information and gave it to a third party, removing it from control of the company.

Naturally, once it found these events had taken place, Samsung warned its employees about the risks of using ChatGPT. It’s vital that workers understand that once information is shared, it could be exposed to an unknown number of people. Samsung is taking steps to guard against such use in the future and, if those don’t work, will block the tool on its network. Samsung is also developing its own similar tool for internal use.

The Samsung incident is a clear demonstration of the need for enterprise leaders to understand that company information cannot be shared this way.

We need an Apple approach to Generative AI

That, of course, is why Apple’s general approach to AI makes so much sense. While there are exceptions (including at one time the egregious sharing of data for grading within Siri), Apple’s tack is to try to make intelligent solutions that require very little data to run. The argument is that if Apple and its systems have no insight into a user’s information, then that data remains confidential, private, and secure.

It’s a solid argument that should by rights extend to generative AI and potentially any form of search. Apple doesn’t seem to have an equivalent to ChatGPT, though it does have some of the building blocks upon which to create on-device equivalents of the tech for its hardware. Apple’s incredibly powerful Neural Engine, for example, seems to have the horsepower to run LLMs with at least somewhat equivalent operations on the device. Doing so would deliver a far more secure tool.

Though Apple doesn’t yet have its own Designed In Cupertino take on Generative AI waiting in the wings, it’s inevitable that as the risks of the ChatGPT model become more widely understood, the demand for private and secure access to similar tools will only expand. Enterprises want the productivity and efficiency, but not at the expense of confidentiality.

The future of such tools seems, then, likely to be based on taking such solutions as much as possible out of the cloud and onto the device. That’s certainly the direction of travel Microsoft seems to be signalling as it works to weave OpenAI tools within Azure and other products.

In the meantime, anyone using LLMs should follow the advice from the NCSC and ensure they don’t include sensitive information in the queries they make. They should also ensure they don’t submit queries to public LLMs that “would lead to issues were they made public.”
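For teams that want to put that advice into practice, one option is a simple check that runs before a query ever leaves the building. What follows is a minimal sketch in Python, not a recommendation from the NCSC or anyone else: the patterns, the `redact` and `safe_to_send` names, and the placeholder format are all illustrative assumptions. Pattern matching of this kind will not recognize proprietary source code or the contents of a meeting, so it is a backstop, not a substitute for judgment.

```python
import re
from typing import List, Tuple

# Hypothetical patterns for illustration only; any real deployment would
# need rules tailored to its own data and would still miss plenty.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SECRET": re.compile(
        r"\b(?:api[_-]?key|password|token)\s*[:=]\s*\S+", re.IGNORECASE
    ),
    "INTERNAL": re.compile(r"\bCONFIDENTIAL\b|\bINTERNAL ONLY\b", re.IGNORECASE),
}


def redact(prompt: str) -> Tuple[str, List[str]]:
    """Replace each match with a placeholder and report which rules fired."""
    hits: List[str] = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            hits.append(label)
    return prompt, hits


def safe_to_send(prompt: str) -> bool:
    """True only when none of the illustrative rules match the prompt."""
    return not redact(prompt)[1]
```

A wrapper around whatever client a company uses could call `safe_to_send` first and refuse, or send only the redacted text, when a rule fires. The design choice here is to fail closed: anything that looks sensitive is held back, and a human decides whether it should go out at all.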

And, given that humans are the weak link in every security chain, most businesses probably need to develop an action plan in the event an infringement does take place. Perhaps ChatGPT can suggest one?
