Having announced plans in May to build generative AI into its collaboration application, Slack now expects to make the new features available to select customers as part of a pilot this winter, with a full rollout next year.

The company has been experimenting with genAI internally for several months to identify the best ways of integrating the technology for collaboration purposes. The initial focus is on three main use cases designed to save users time by automating certain processes.

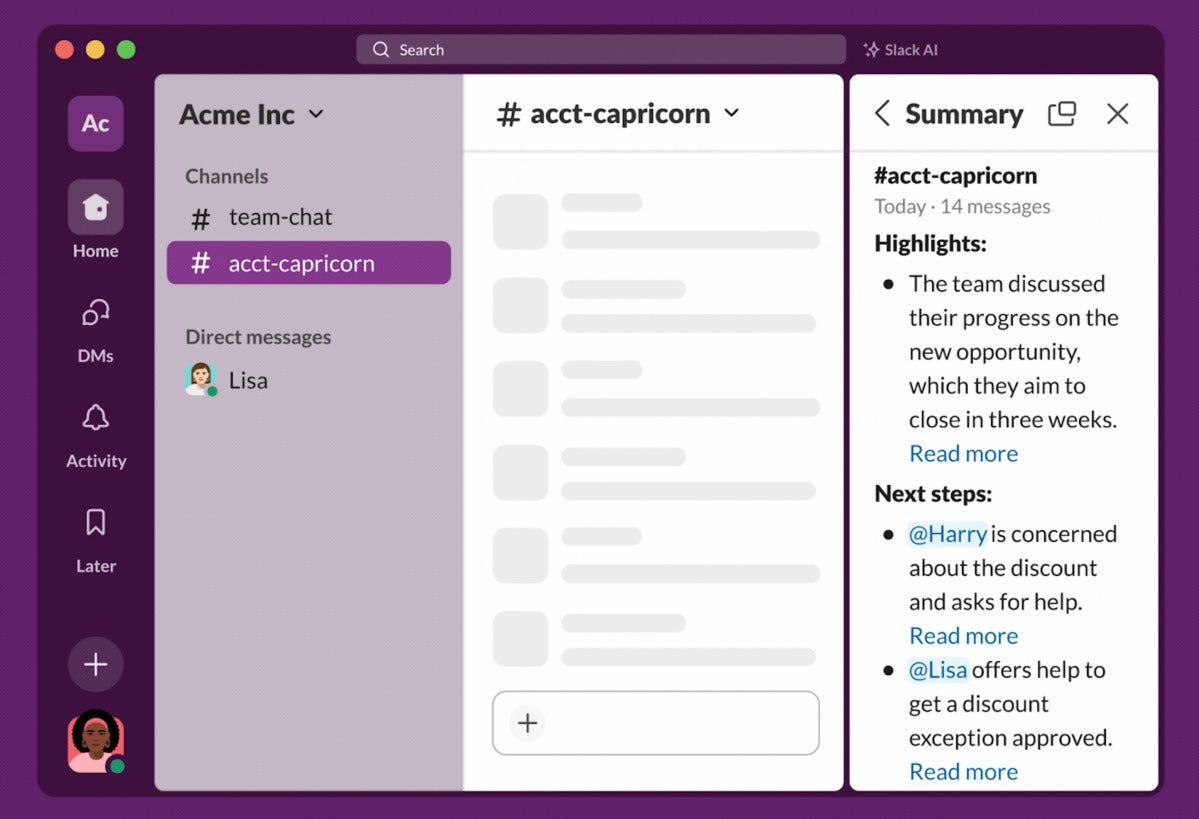

One is the ability to summarize conversations that take place in Slack channels and threads.

“People end up having these super long conversations in threads; hundreds of messages,” said Ali Rayl, senior vice president of product management at Slack. “The idea is you click a button, AI runs through the thread and says, 'Here's what happened in the last five hours.' And instead of reading the whole thread, you get a nice little summary.”

Another similar feature is a planned channel “recap,” providing a user with a quick summary of conversations within a particular team channel. If a user has been away for a couple of weeks — or even just a day or two — and doesn’t want to trawl through a barrage of messages that may or may not be relevant, the AI tool can provide an overview of what’s been discussed.

Finally, Slack wants to use genAI to generate better answers when users search for information in the app. “Slack has always had search — we do our best to surface messages and files related to your search,” said Rayl. “But using generative AI, we can take this one step further — you can ask a question and we can go through all the information that your company has in Slack and give you an answer to that exact question.”

A user could, for example, ask Slack for the rollout date for a product and immediately receive a specific date, rather than having to scroll through a list of related posts and links to documents in search results to find the information.

Slack

Slack is adding generative AI tools that can summarize team chats, huddles, and even documents to surface needed information quickly.

GenAI could make collaboration more productive

The ability to access genAI functions directly from a collaboration application should save users time, said Kim Herrington, a senior analyst at Forrester.

“Typically, you want to be able to leverage genAI in the places and spaces where people are already working and communicating to maximize user adoption,” said Herrington. This can help a user more quickly move “from knowledge seeker to insights” — and ultimately to action, she said.

Slack isn’t alone in weaving AI into its software. Google and Microsoft, which also sell team collaboration applications, have been pushing ahead with their own genAI pilot projects with customers, and Google is already bringing the technology to market.

These moves mark the beginning of the “next phase, or evolution,” in group chat-centric workspaces, said Mike Gotta, research vice president at Gartner.

The freeform nature of collaboration tools, which are used to share information, coordinate work, and maintain awareness of work activity, can lead to a lot of noise, Gotta said, with users struggling at times to find relevant information in the applications. Generative AI can help, he said, by automating processes to uncover “hidden insights related to people, topics, files, tasks, and create a synopsis of what the group has been discussing.”

Generative AI can also help new team members quickly re-engage with teammates when they return from a different task, another challenge users face when accessing collaboration apps. “When you ‘leave the flow’ it’s hard to catch up,” he said.

There are benefits for managers or team leaders, too, Gotta said. “For those that are overseeing the work, GAI [generative AI] can provide a self-service means of gaining awareness of the overall team deliverable and even the well-being or concerns that the team has expressed through their chat posting,” he said.

Tackling genAI ‘hallucinations’

Along with the various benefits of generative AI in collaboration apps, there are drawbacks to using large language models (LLMs) in a business context. LLMs are prone to hallucinations (the output of incorrect information in response to user prompts), and for Slack, acknowledging the problem has been part of the genAI development process.

One tack the company has taken is to provide transparency via citations or links that show where the information in a conversation summary comes from. This is done with a “read more” button that returns the user to the original message and channel that generated the summary.

“Oftentimes, a summary is actually a synthesis of five or six different messages,” said Rayl. “We'll give you links to all of those, so you can go back and double check the accuracy — it's very easy to see where all of this comes from. This is one way that we are guarding against hallucinations.”

And if the AI can’t provide an attribution and a source, the tool won’t generate a response at all, in order to avoid errors.

Slack also looks to be intentionally selective about where and when genAI is used within its app. Hallucinations are more common when AI is given too little data, Rayl said, which means that summaries of short conversations in huddles — Slack’s voice and video tool — are not considered a good fit. If a huddle is just a couple of minutes long, for example, the AI might just invent parts of a conversation to provide a summary. That’s why limits on user interaction with AI make sense, she said.

“Those are the kinds of restrictions that we're looking to find…, where we know that the AI is going to fail, and how do we put guardrails in so that people just don't get into that situation? So, in this case, it's like, 'If the huddle is only this long, the AI isn't available, as there's not enough to summarize.'”
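The two guardrails Rayl describes, withholding a summary that has no source attribution and disabling summaries for very short huddles, can be illustrated with a minimal Python sketch. This is not Slack's implementation: the names `DraftSummary`, `huddle_summary_available`, and `release_summary` are hypothetical, and the 300-second threshold is an assumption, since Slack has not published an actual cutoff.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed threshold for illustration only; the article gives no real number.
MIN_HUDDLE_SECONDS = 300


@dataclass
class DraftSummary:
    """A model-generated summary plus the messages it claims to draw on."""
    text: str
    source_message_ids: List[str] = field(default_factory=list)


def huddle_summary_available(duration_seconds: float,
                             min_seconds: float = MIN_HUDDLE_SECONDS) -> bool:
    """Guardrail 1: do not offer a summary when there is too little to summarize."""
    return duration_seconds >= min_seconds


def release_summary(draft: DraftSummary) -> Optional[str]:
    """Guardrail 2: return nothing unless the summary cites at least one source."""
    if not draft.source_message_ids:
        return None
    return draft.text
```

In this sketch, a two-minute huddle never reaches the model, and a draft with an empty source list is dropped rather than shown to the user.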

Still, the LLM tool has proven reliable for Slack’s use cases, said Rayl, especially for questions that have factual answers.

“We obviously have a lot of information in Slack — we use Slack a lot to get our work done — but we are just one company, so we need to work with our customers to figure out: Is this working for them? If not, why not make it better, and then roll it out to everybody once we're confident we have a really good product,” said Rayl.

The customer pilot program is expected to allow Slack to identify issues and hone the user experience before a widespread release.

Collaboration, AI, and data privacy

With limited enterprise-grade tools to date, genAI has essentially existed as a form of “shadow IT” since it arrived last year, with employees accessing services such as OpenAI’s ChatGPT. Doing so risks sending sensitive corporate data to another company’s servers.

One of the advantages of building genAI features natively into collaboration apps is that data should remain under the control of the customer organization.

“They [user queries] are not being used to train somebody else's model,” said Rayl. “They're not even being used to train the model that we run. We're running a self-contained LLM that ensures data isn't leaking between customers and data isn't going out to the internet.”

Rayl also noted that Slack’s AI won’t allow employees to access conversation data they don’t ordinarily have access to in their workspace. The same goes for files and documents available to users.

“[The AI] has access to the same data that our existing search experience has access to,” Rayl said. “So, if I run a search for something that is in [a colleague’s] DMs, I cannot see it. But if I run a search for something that's in my DMs I can. Same with private channels: if you have access to it, AI can search it; if you don't, [it] can't.”
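The access rule Rayl describes, under which the AI can read only what the user's own search could already read, amounts to filtering messages by permission before anything reaches the model. The Python sketch below illustrates that idea under stated assumptions: the `Message` type, the conversation identifiers, and both function names are hypothetical, and plain keyword matching stands in for whatever retrieval Slack actually uses.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Message:
    conversation_id: str  # hypothetical identifier for a channel or DM
    text: str


def visible_messages(messages: Iterable[Message],
                     accessible: Set[str]) -> List[Message]:
    """Keep only messages from conversations the user can already open."""
    return [m for m in messages if m.conversation_id in accessible]


def search_for_user(query: str,
                    messages: Iterable[Message],
                    accessible: Set[str]) -> List[Message]:
    """Apply the permission filter first, then a simple keyword match."""
    needle = query.lower()
    return [m for m in visible_messages(messages, accessible)
            if needle in m.text.lower()]
```

Because the filter runs before the match, a message in a colleague's DM never becomes a candidate for a summary or answer, regardless of how relevant it is to the query.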

Essentially, it’s the same Slack search experience but “with a layer of AI to make sense of, synthesize, and answer questions,” she said.

“The world of [generative AI] is [at the] very early stage, so concerns about security, compliance, privacy, and employee adoption are going to remain high on the list of concerns for organizations,” said Gotta. “It will take time to normalize GAI, reduce concerns over accuracy, and address any related pricing versus return on value issues.”